Our experiments are proofs of concept for AR experiences that feel intuitive and effortless.

*

AR for Louvre Abu Dhabi 03

At around, we see huge potential in combining AR with AI in a museum environment. AI — tells. AR — shows.

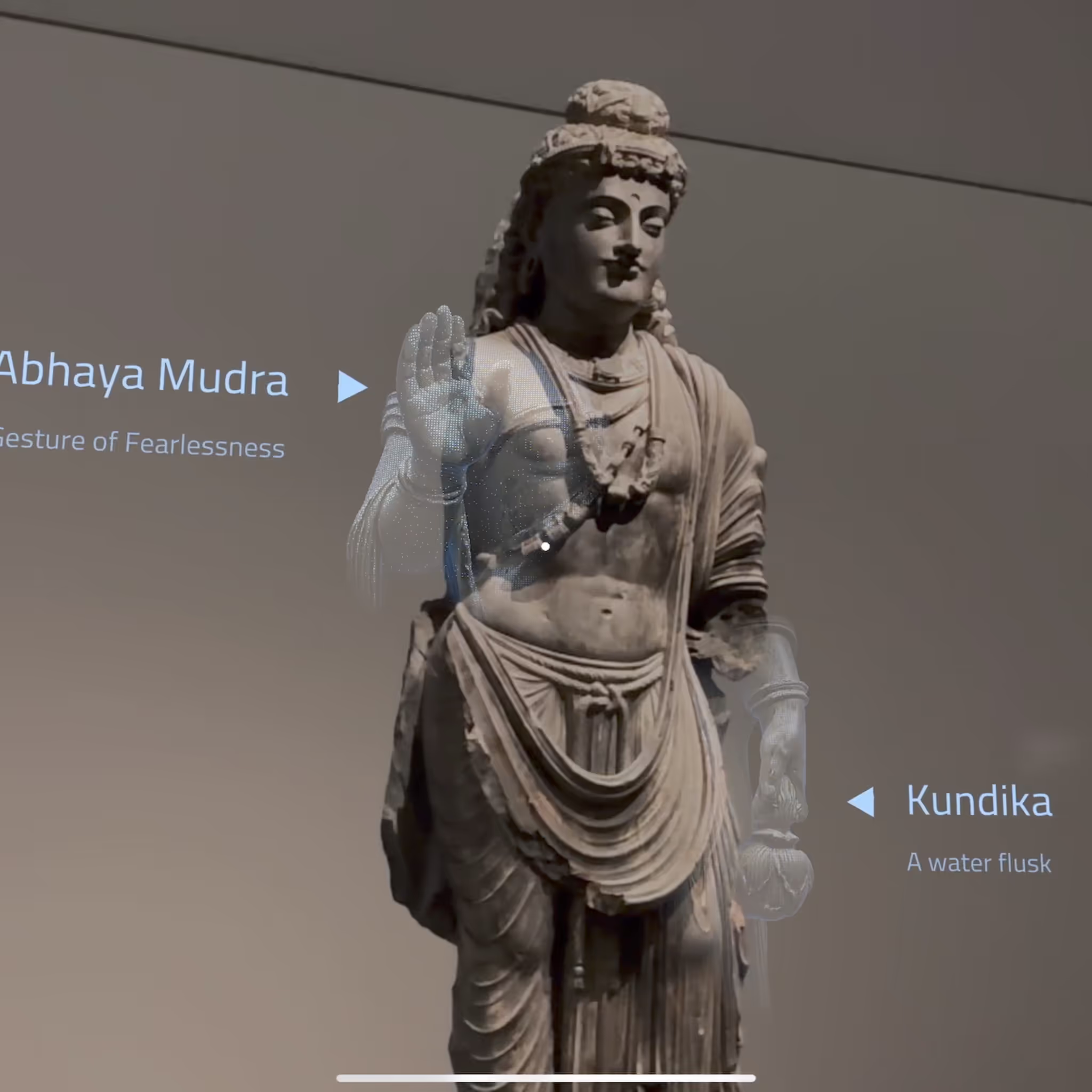

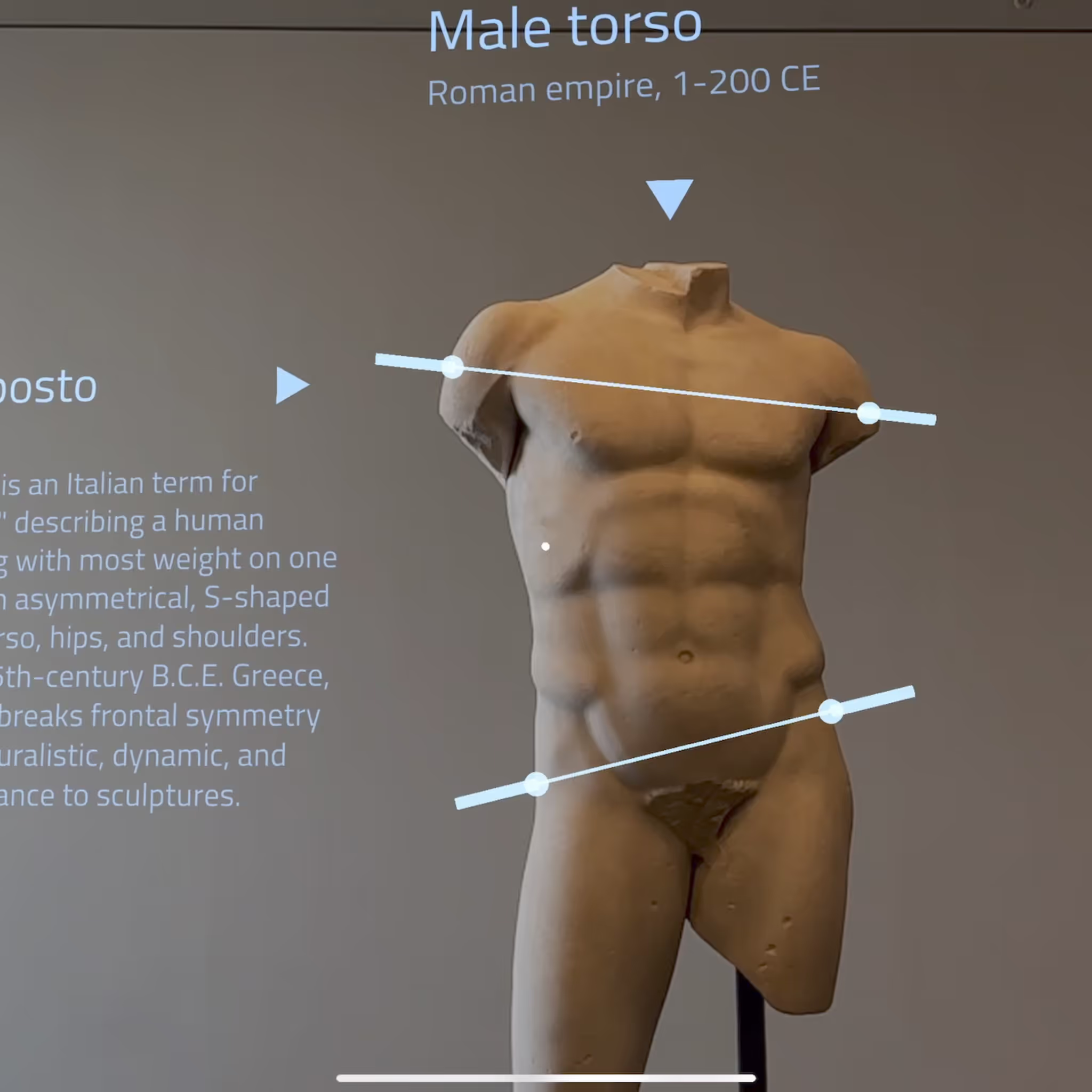

*

AR for Louvre Abu Dhabi 02

Continuing the “AI — tells, AR — shows” theme at the Louvre Abu Dhabi, here’s an example of how simple graphics can explain something faster and more clearly. These graphics are revealed based on the visitor’s proximity to the subject. At around, we love finding spatial interaction patterns that feel seamless and bring convenience.
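
A minimal, engine-agnostic sketch of proximity-driven reveal, written in Python for readability rather than taken from the prototype. It assumes the visitor’s position comes from device tracking and uses hypothetical `near`/`far` thresholds in metres. The graphic fades in linearly as the visitor approaches.

```python
import math


def reveal_opacity(visitor_pos, subject_pos, near=1.0, far=3.0):
    """Opacity (0..1) of an explanatory overlay, driven by visitor distance.

    Fully visible within `near` metres, hidden beyond `far` metres,
    with a linear fade in between. Both thresholds are assumptions.
    """
    d = math.dist(visitor_pos, subject_pos)
    if d <= near:
        return 1.0
    if d >= far:
        return 0.0
    return (far - d) / (far - near)


# A visitor 2 m from the exhibit sees the graphic half faded in.
print(reveal_opacity((0.0, 0.0, 0.0), (0.0, 0.0, 2.0)))  # 0.5
```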

*

AR for Louvre Abu Dhabi 01

At around, we see huge potential in combining AR with AI in a museum environment.

AI — tells.

AR — shows.

In this prototype, labels and hieroglyphs can be translated into any language, and visually highlighting the selection adds clarity to the experience.

*

Hologram Shader Unity Breakdown

We raised an ethical question: should an AR app use a realistic shader that matches the lighting of the actual sculpture’s surface, or an intentionally “artificial” look? The second option avoids imitating the real material and condition of the object, and instead carefully presents a hypothesis of what it might have looked like with its missing parts restored.

In the end, I built a shader with a blend-mode switch (Normal / Screen), plus additional effects that further separate the AR layer visually from the original sculpture.
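
For reference, the two modes of such a switch can be sketched per pixel as below. The Screen formula is the standard one from image editing. The function name and the CPU-side Python form are illustrative assumptions, not the shader’s actual source.

```python
def blend(base, overlay, alpha, mode="normal"):
    """Composite an overlay color onto a base color; channels are floats in 0..1."""
    if mode == "normal":
        mixed = overlay
    elif mode == "screen":
        # Screen: 1 - (1 - a) * (1 - b); never darker than either input
        mixed = tuple(1.0 - (1.0 - b) * (1.0 - o) for b, o in zip(base, overlay))
    else:
        raise ValueError(f"unknown blend mode: {mode!r}")
    # Opacity interpolates between the untouched base and the blended color
    return tuple(b + (m - b) * alpha for b, m in zip(base, mixed))


print(blend((0.5, 0.5, 0.5), (0.5, 0.5, 0.5), 1.0, "screen"))  # (0.75, 0.75, 0.75)
```

Because Screen can only brighten what is underneath, the reconstruction tends to read as light on top of the stone rather than as new material. That fits the goal of keeping the hypothesis visibly separate from the original.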

*

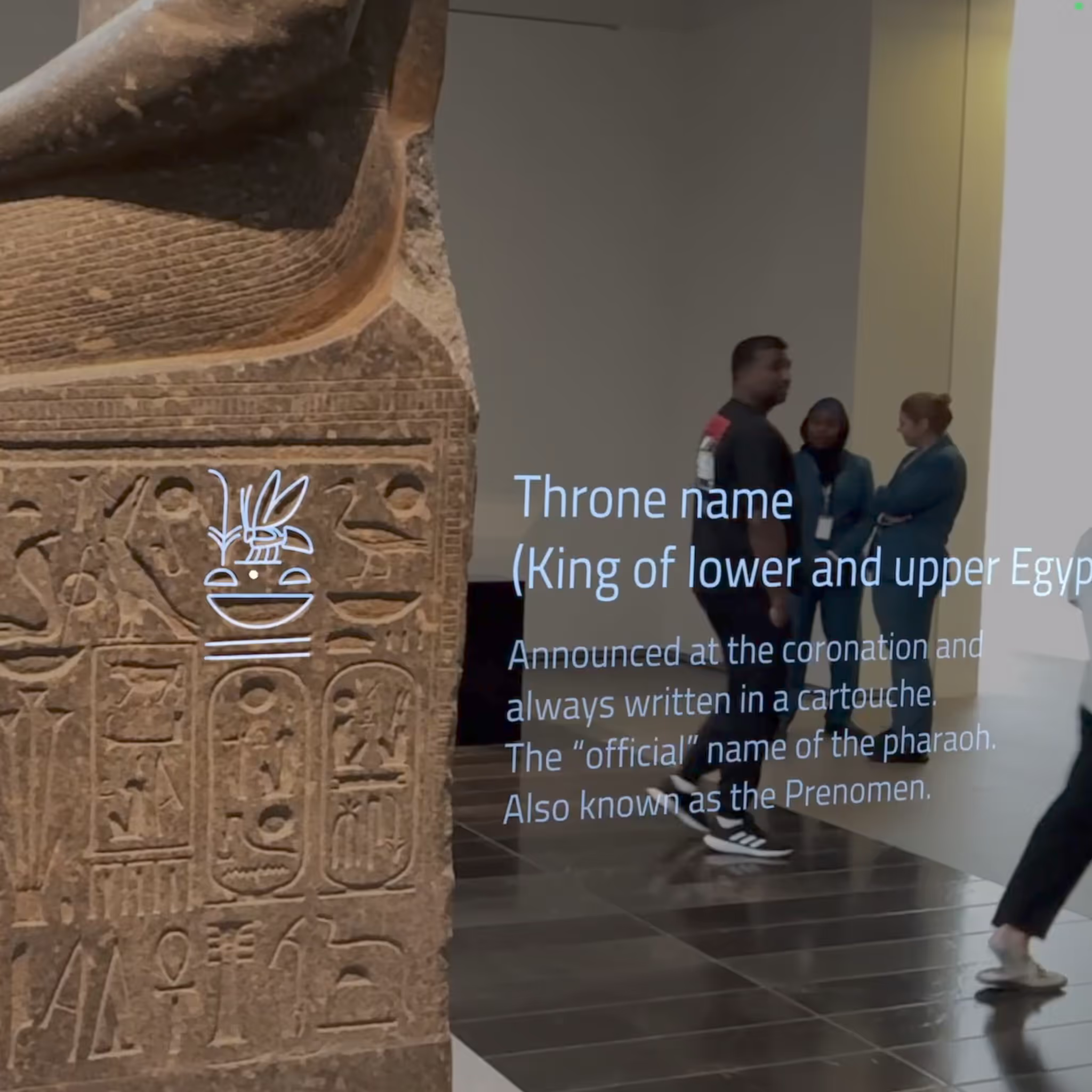

GEM AR experience Prototype

As ambassadors for bringing XR into every area of human life, we couldn’t ignore last year’s biggest museum event: the opening of the Grand Egyptian Museum. In just a few days in Egypt, we built a working prototype of an app that interacts with museum objects and helps visitors make sense of patterns, artifacts, and Egyptian symbolism. We even managed to test it right inside the museum.

*

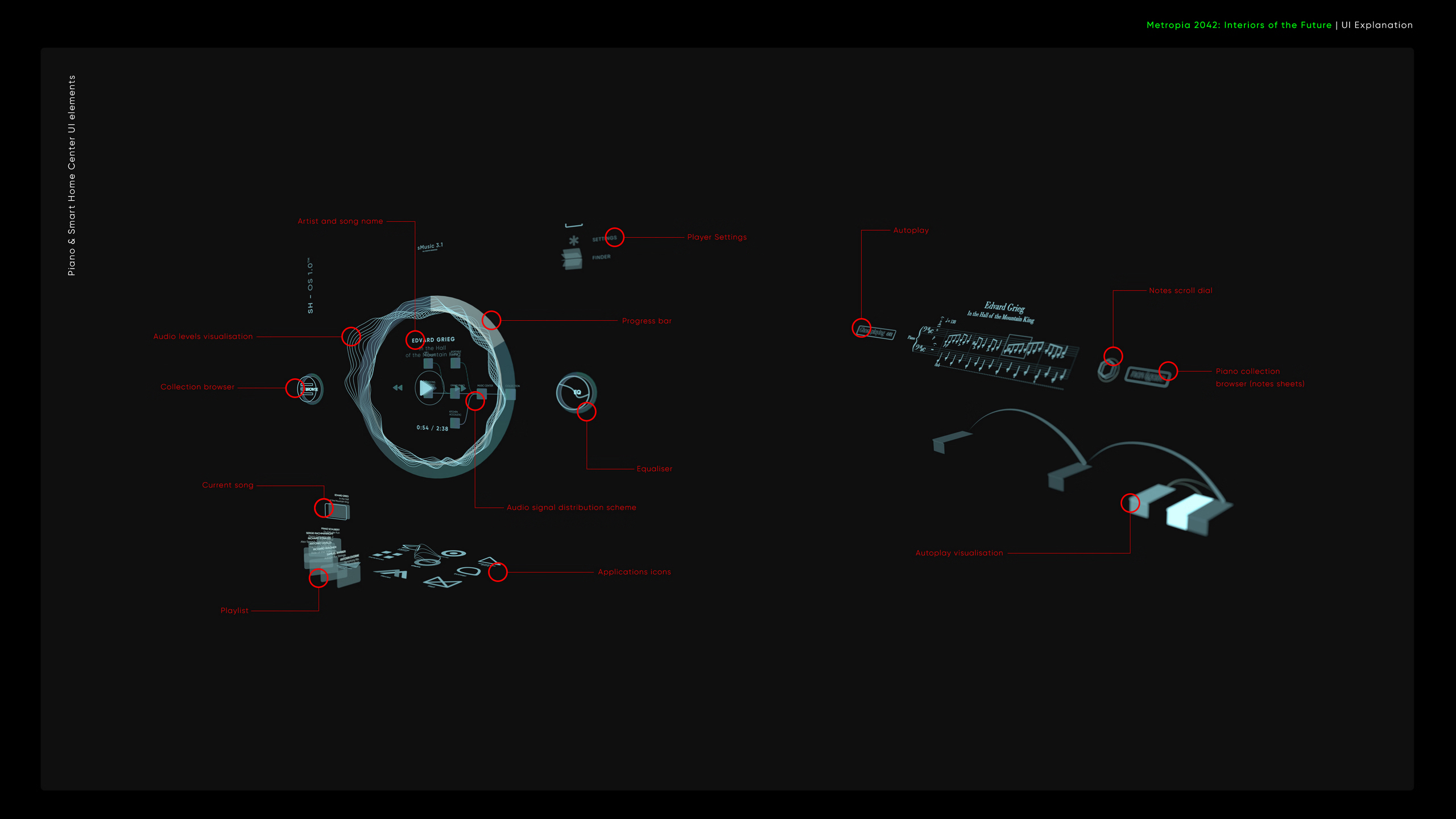

Interiors of The Future Contest

At around, we developed this concept for the Interiors of The Future Contest hosted by ArtStation. As the name suggests, the challenge was to design a futuristic living space.

Our concept envisions a smart home interior integrated with AR interfaces, seamlessly blending technology with daily life. The design explores how augmented reality could enhance both functionality and aesthetics in modern living spaces, creating an environment that is innovative, intuitive, and forward-thinking.

*

Additional Layer

The idea of blending the natural with the technological has long been one of our core explorations. In this project, we studied how plants could “communicate” with humans through augmented-reality interfaces. Our goal was to create visual systems that reflect the organic rhythms and forms of the plants themselves, translating their behavior into a clear and sensory AR language.

*

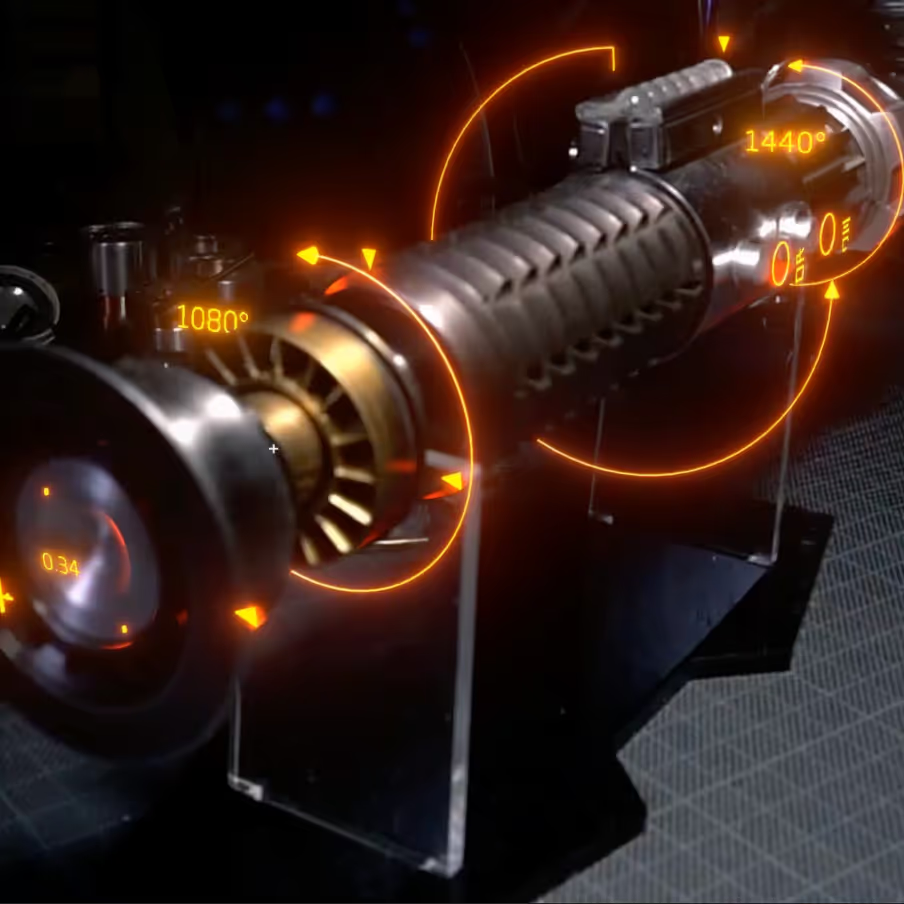

Lightsaber study 01

This experiment explores which UI elements a saber hilt could display to support maintenance. The gaze-based interaction has three stages: when the object is out of sight, no UI appears; when it is centered in view, subtle hints appear; and on closer focus, the full UI is revealed. A subtle but technically complex feature is also included: real-time reflections of the UI elements on the hilt’s surface, which help the AR layer blend seamlessly with the physical object.
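
The three-stage logic could be expressed roughly as follows. This is a sketch under assumptions. “Centered” is measured as the angle between the camera’s forward vector and the direction to the hilt. “Closer focus” is read as tightly centered *and* within a short distance. Every threshold value is hypothetical.

```python
import math
from enum import Enum


class UIStage(Enum):
    HIDDEN = 0  # not centered or out of sight: no UI
    HINTS = 1   # roughly centered in view: subtle hints
    FULL = 2    # tightly centered and close: full UI


def view_angle_deg(cam_pos, cam_forward, target_pos):
    """Angle between the camera's forward vector and the direction to the target."""
    to_target = [t - c for t, c in zip(target_pos, cam_pos)]
    dot = sum(a * b for a, b in zip(to_target, cam_forward))
    cos_a = dot / (math.hypot(*to_target) * math.hypot(*cam_forward))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))


def gaze_stage(cam_pos, cam_forward, target_pos,
               hint_angle=12.0, full_angle=5.0, full_distance=0.5):
    """Map the hilt's position relative to the camera to one of three UI stages."""
    angle = view_angle_deg(cam_pos, cam_forward, target_pos)
    distance = math.dist(cam_pos, target_pos)
    if angle <= full_angle and distance <= full_distance:
        return UIStage.FULL
    if angle <= hint_angle:
        return UIStage.HINTS
    return UIStage.HIDDEN


print(gaze_stage((0, 0, 0), (0, 0, 1), (0, 0, 0.4)))  # UIStage.FULL
```

In practice, some hysteresis around each threshold would likely be needed so the UI does not flicker as the head moves.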

*

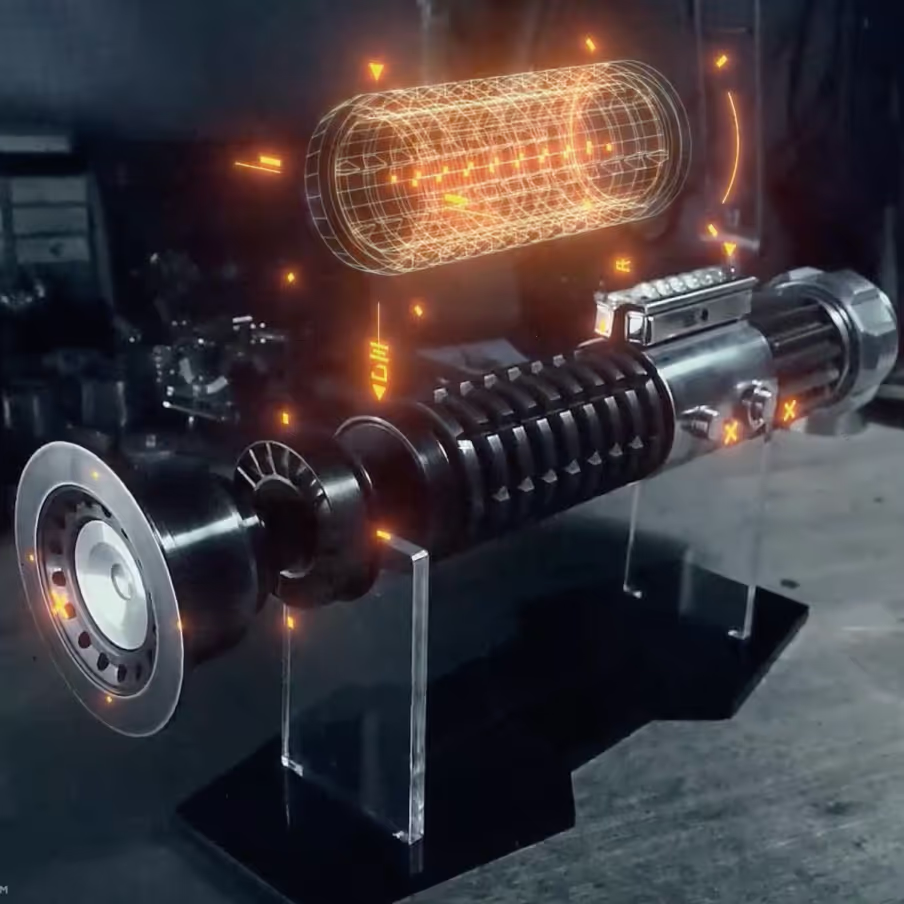

Lightsaber AR UI study 02

This experiment adds visual complexity to the AR layer, making it rich in detail without feeling overloaded. Every part of the hilt can be examined closely, with wireframe overlays and contextual explanations (if you can read Aurebesh!). The same gaze-based interaction model is used — parts are selected by centering them in the frame.
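
Selecting a part by centering it can be sketched as picking the part whose direction makes the smallest angle with the view direction, within a small cone. The part names, their positions, and the 8° cone are hypothetical.

```python
import math


def pick_centered_part(cam_pos, cam_forward, parts, max_angle=8.0):
    """Return the name of the part nearest the center of view, or None if none is within max_angle."""
    best_name, best_angle = None, max_angle
    forward_len = math.hypot(*cam_forward)
    for name, pos in parts.items():
        to_part = [p - c for p, c in zip(pos, cam_pos)]
        dist = math.hypot(*to_part)
        if dist == 0.0:
            continue  # camera sits exactly at the part's pivot; skip it
        cos_a = sum(a * b for a, b in zip(to_part, cam_forward)) / (dist * forward_len)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


# Hypothetical part pivots (m) in camera space, camera looking down +z
parts = {"emitter": (0.0, 0.1, 1.0), "switch": (0.0, 0.0, 1.0), "pommel": (0.0, -0.1, 1.0)}
print(pick_centered_part((0, 0, 0), (0, 0, 1), parts))  # switch
```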

*

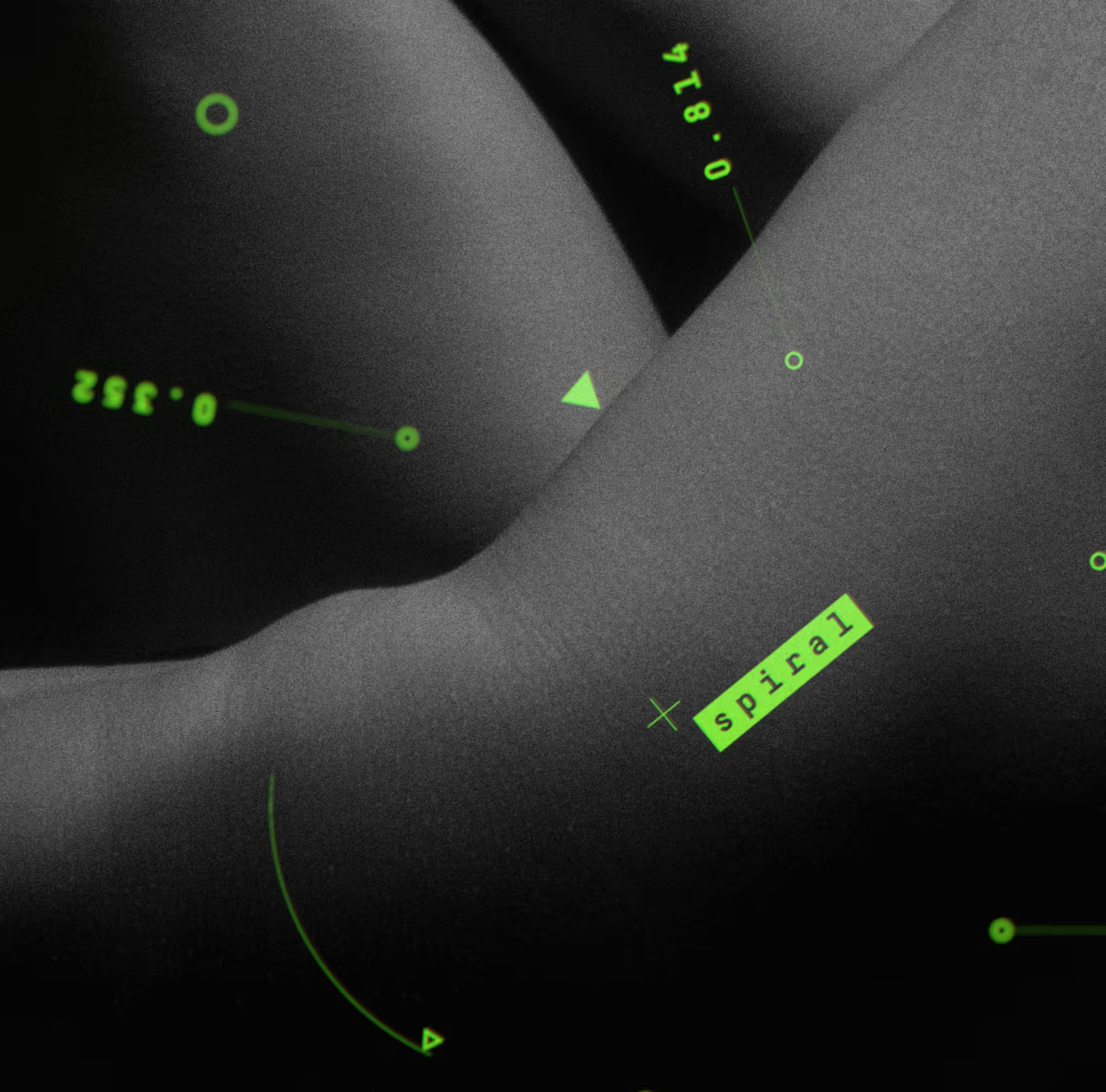

Body surface interaction

This concept explores a visual style and idea built around combining opposites: forms that are both flexible and alive, yet synthetic and structured.

*

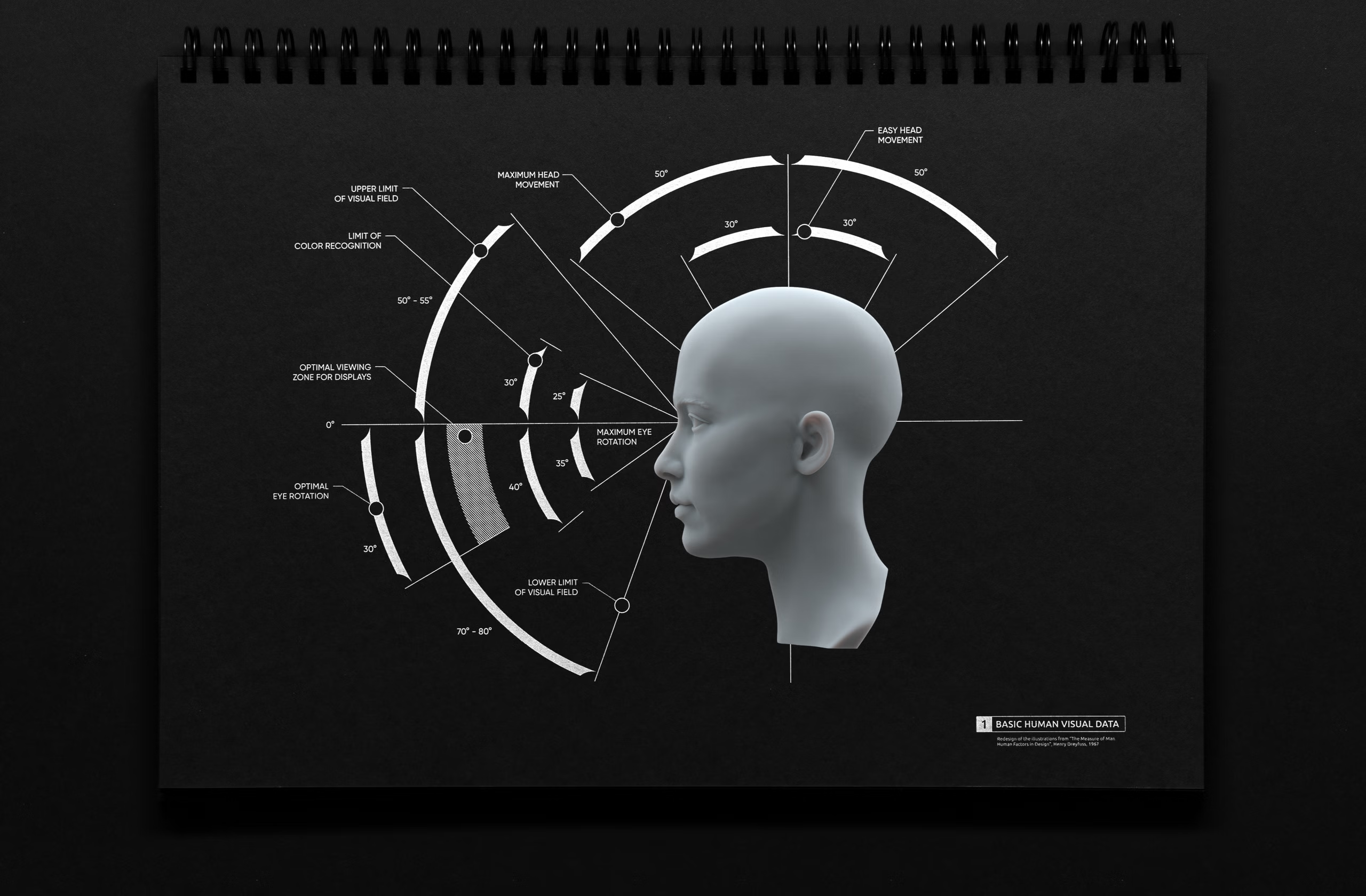

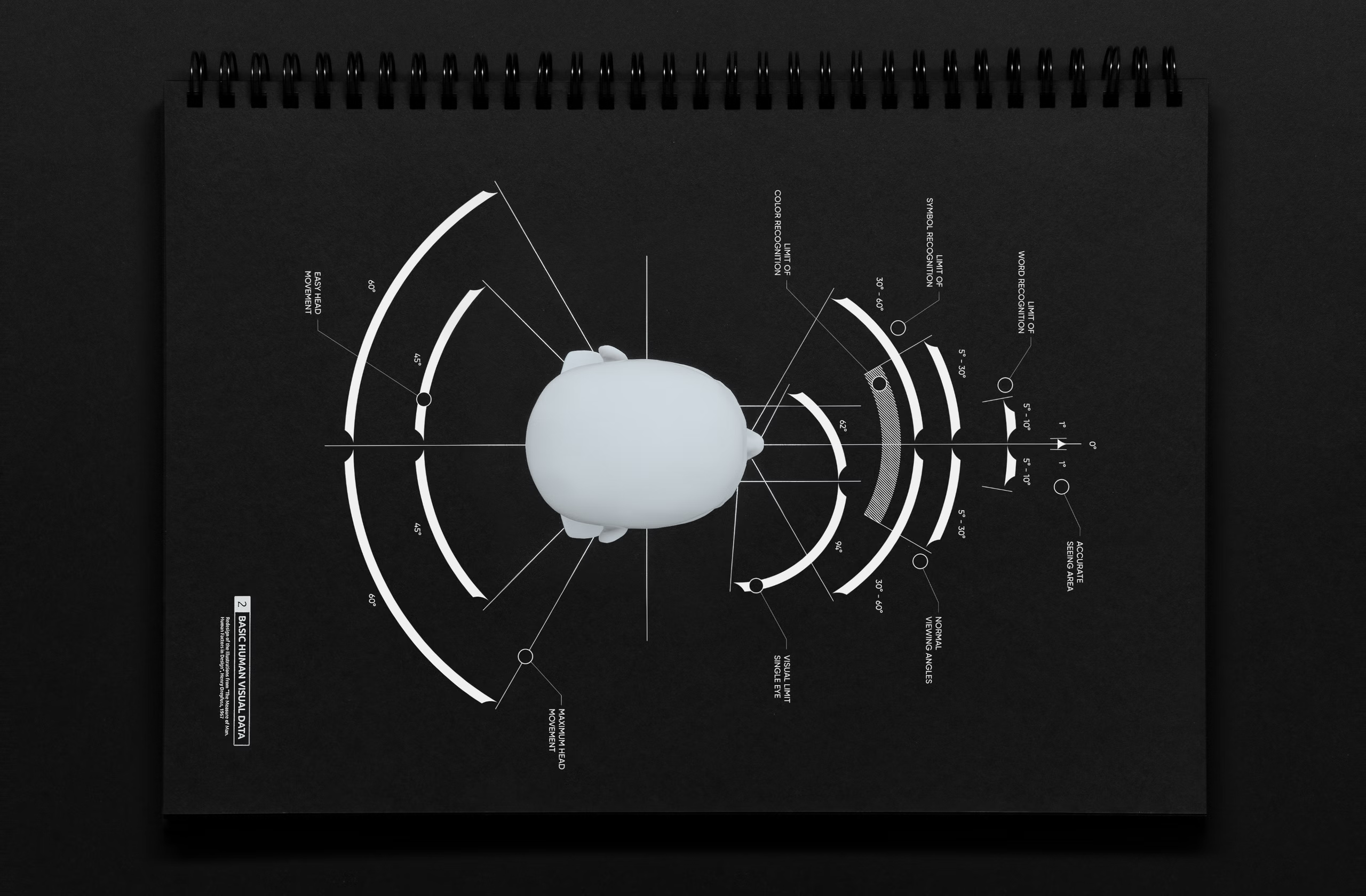

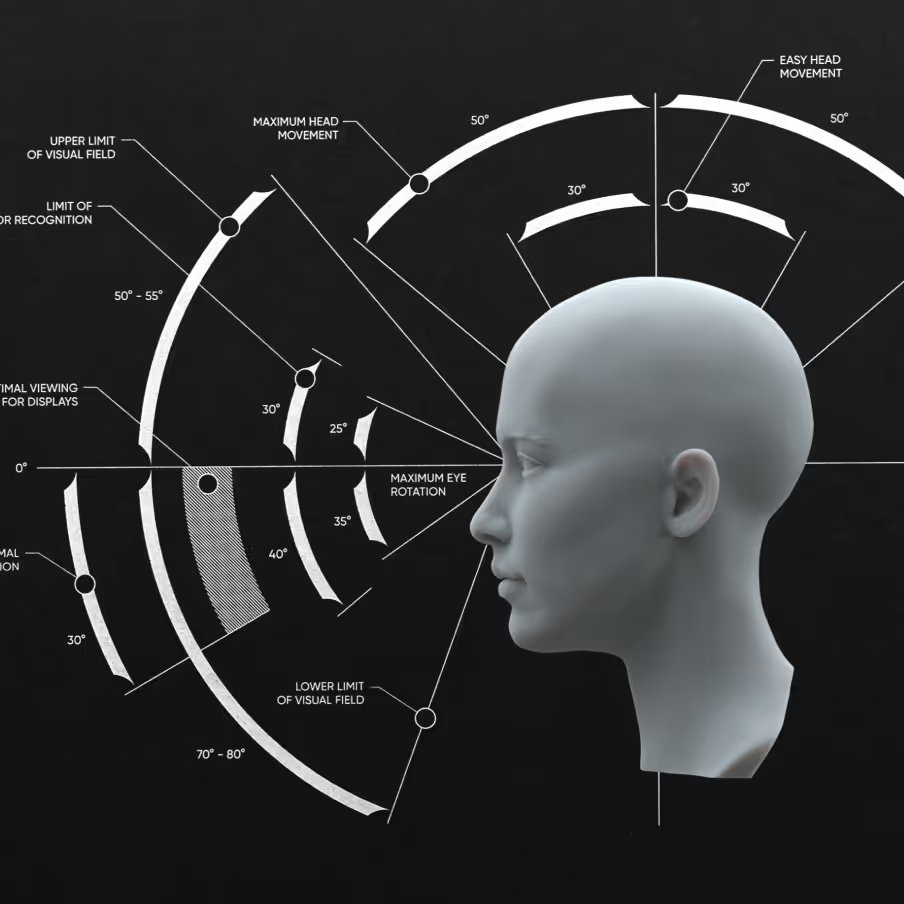

Redesign of The Projection Rules

This project is the result of a passion for design, measuring instruments, scales, and interfaces. It was created as a poster series intended for print.

*

Lightsaber AR UI study 03

Use the Force (or hand-tracking) to reveal an exploded view of the hilt and discover what it’s made of. A handy feature for young Padawans — or just those who can’t read Aurebesh. This experience was created using a combination of model tracking and hand tracking in a single scene.
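
One simple way to drive an exploded view from a gesture is to scale each part’s offset along the hilt axis by a hand-controlled factor. The sketch below assumes part offsets in the hilt’s local space, which become available once model tracking provides the hilt pose. It also assumes a normalized gesture value `t`. The part names, the `spread` constant, and how `t` would be derived from hand tracking are all hypothetical.

```python
def smoothstep(x):
    """Clamp x to 0..1 and ease it so the explosion starts and ends gently."""
    x = max(0.0, min(1.0, x))
    return x * x * (3.0 - 2.0 * x)


def exploded_offsets(rest_offsets, t, spread=1.5):
    """Scale each part's offset along the hilt axis by a gesture-driven factor.

    rest_offsets: part name -> signed offset (m) from the hilt center along its axis.
    t: 0 = assembled, 1 = fully exploded (e.g. from a hand-tracking gesture).
    """
    k = 1.0 + spread * smoothstep(t)
    return {name: offset * k for name, offset in rest_offsets.items()}


parts = {"pommel": -0.12, "grip": -0.03, "switch": 0.04, "emitter": 0.12}
print(exploded_offsets(parts, 1.0))
```

Scaling about the hilt center keeps the parts in their original order along the axis, so the exploded view still reads as the same object pulled apart.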

*

Realtime AR reflections and DOF

In this experiment, the goal was maximum realism: making the real-time AR UI feel seamlessly integrated into the live camera feed. The depth of field of virtual elements matches the real footage, and glowing UI components cast reflections and light onto the hilt’s metal surfaces. These details may seem excessive by today’s AR standards, but they’ll become essential once AR enters everyday life.
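
Matching virtual depth of field to real footage generally means blurring each virtual element by the circle of confusion the physical lens would produce at that element’s depth. The sketch below uses the standard thin-lens formula. It assumes the camera’s focal length, f-number, focus distance, and sensor width are known, which not every AR platform exposes. The numbers in the usage lines are made up.

```python
def coc_diameter_mm(subject_mm, focus_mm, focal_mm, f_number):
    """Thin-lens circle-of-confusion diameter on the sensor (mm).

    c = |S2 - S1| / S2 * f^2 / (N * (S1 - f)), with S1 = focus distance,
    S2 = subject distance, f = focal length, N = f-number.
    """
    return (abs(subject_mm - focus_mm) / subject_mm
            * focal_mm ** 2 / (f_number * (focus_mm - focal_mm)))


def coc_pixels(coc_mm, sensor_width_mm, image_width_px):
    """Convert the blur diameter from sensor millimetres to image pixels."""
    return coc_mm / sensor_width_mm * image_width_px


# Hypothetical phone-camera values: 4.2 mm lens at f/1.8, 5.6 mm sensor width,
# 1920 px frame, focused at 0.3 m, virtual element at 0.5 m.
blur_px = coc_pixels(coc_diameter_mm(500.0, 300.0, 4.2, 1.8), 5.6, 1920)
print(round(blur_px, 1))  # 4.5
```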

*

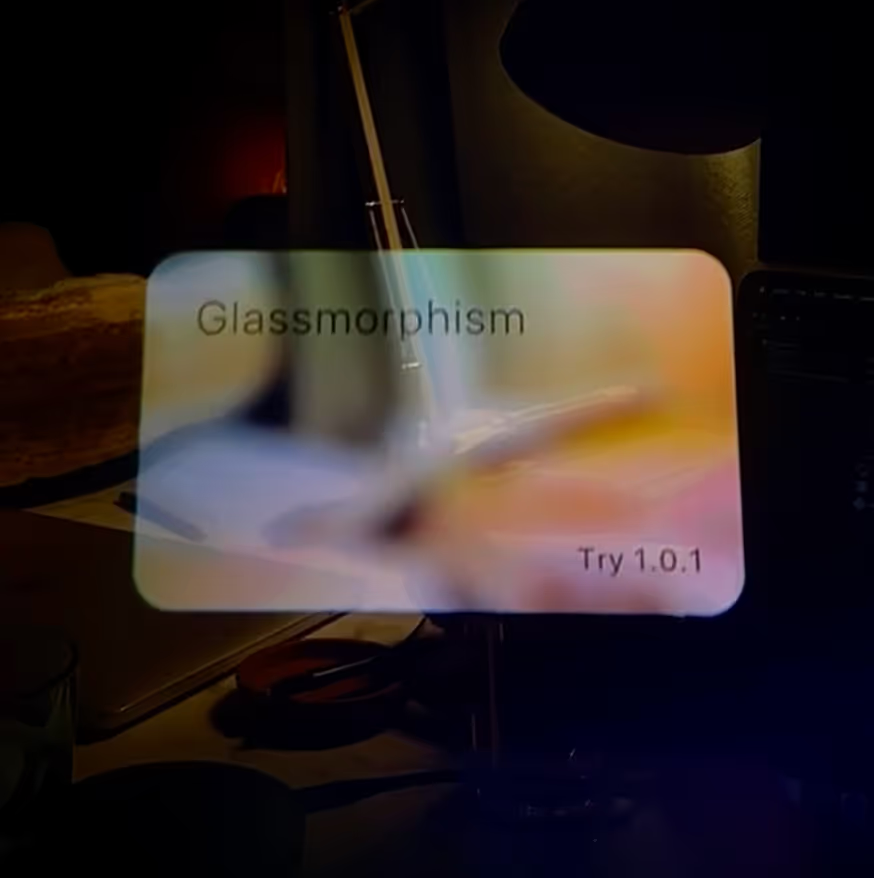

See through glassmorphism

Glassmorphism has become popular in recent years, and it’s evolving nicely in XR, especially with the arrival of Apple’s visionOS. But on a transparent, see-through AR display, there’s no static background to blur. By using the device’s raw camera feed, however, we can still create a matte, glass-like effect. This experiment was recorded on Magic Leap 2, and while the video can’t fully replicate how the blur looks to the user, it shows that glassmorphism is indeed possible on this type of display.
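
The core idea, blurring the camera pixels that sit behind a panel, can be sketched as follows. This is a simplified illustration. The frame is a plain grayscale list of rows rather than a real Magic Leap 2 camera buffer. A separable box blur stands in for the Gaussian a production shader would more likely use. Alignment between the camera image and the user’s view through the optics is ignored. `frosted_panel` and its `tint` parameter are hypothetical.

```python
def box_blur_1d(values, radius):
    """Average each sample with its neighbors; windows are truncated at the edges."""
    n = len(values)
    out = []
    for i in range(n):
        window = values[max(0, i - radius):min(n, i + radius + 1)]
        out.append(sum(window) / len(window))
    return out


def box_blur(frame, radius):
    """Separable box blur of a grayscale frame given as a list of rows (0..1)."""
    rows = [box_blur_1d(row, radius) for row in frame]                # horizontal pass
    cols = [box_blur_1d(list(col), radius) for col in zip(*rows)]     # vertical pass
    return [list(row) for row in zip(*cols)]                          # back to rows


def frosted_panel(frame, x0, y0, x1, y1, radius=4, tint=0.15):
    """Blur the camera pixels behind a panel rectangle and lift them toward white."""
    region = [row[x0:x1] for row in frame[y0:y1]]
    blurred = box_blur(region, radius)
    return [[v + (1.0 - v) * tint for v in row] for row in blurred]


camera = [[(x + y) % 2 * 1.0 for x in range(8)] for y in range(8)]  # checker stand-in
panel = frosted_panel(camera, 2, 2, 6, 6, radius=1)
print(panel[0])
```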

*

Hand UI study 01

Hand-tracking exploration featuring a 3D, four-value dynamic chart — with rotation speed controlled by the thumb. Why would you need one in the palm of your hand? We don’t know yet…

*

Hand UI study 02

Hand-tracking exploration featuring layers of circular graphics placed between the index finger and thumb, transforming based on the distance between them. What does it do? Or mean? We don’t know yet — but it looks cool.
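
A sketch of how such rings might be driven by the pinch: the thumb-to-index distance is normalized to 0..1 and fed into each layer’s radius and rotation. The distance range, the number of rings, and the mapping itself are hypothetical, chosen only to illustrate the idea.

```python
import math


def pinch_amount(thumb_tip, index_tip, closed=0.01, opened=0.10):
    """Normalize the thumb-index distance (m) to 0 (pinched) .. 1 (fully open)."""
    d = math.dist(thumb_tip, index_tip)
    return max(0.0, min(1.0, (d - closed) / (opened - closed)))


def ring_layers(thumb_tip, index_tip, count=4):
    """Return the ring center and a (radius, rotation_deg) pair per ring layer."""
    amount = pinch_amount(thumb_tip, index_tip)
    center = tuple((a + b) / 2.0 for a, b in zip(thumb_tip, index_tip))
    half_gap = math.dist(thumb_tip, index_tip) / 2.0
    layers = []
    for i in range(count):
        radius = half_gap * (i + 1) / count             # rings fill the gap between fingertips
        rotation = amount * 90.0 * (i + 1) * (-1) ** i  # alternate spin directions
        layers.append((radius, rotation))
    return center, layers


center, layers = ring_layers((0.0, 0.0, 0.0), (0.06, 0.0, 0.0))
print(center, layers)
```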

*

Hand UI study 03

Hand-tracking exploration featuring a HUD-like abstract UI that unfolds based on the available “field of view” — defined by the space between the user’s hands. An experimental way to reveal different levels of functionality depending on the user’s momentary needs and context.
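
The unfolding logic could be approximated by treating the distance between the palms as the available width and unlocking one level per threshold. The thresholds and the `hud_layout` function are hypothetical; the source does not specify how the levels are defined.

```python
import math


def hud_layout(left_palm, right_palm, thresholds=(0.15, 0.30, 0.45)):
    """Return the HUD center, its width, and how many UI levels to reveal.

    The span between the palms (m) stands in for the available "field of view";
    each threshold the span reaches unlocks one more level of functionality.
    """
    span = math.dist(left_palm, right_palm)
    center = tuple((a + b) / 2.0 for a, b in zip(left_palm, right_palm))
    levels = sum(1 for t in thresholds if span >= t)
    return center, span, levels


print(hud_layout((0.0, 0.0, 0.0), (0.35, 0.0, 0.0)))
```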

*

David of Michelangelo typography exercise

Spatial typography doodle around Michelangelo’s David. The model tracking occludes the glyphs behind the sculpture with impressive precision.

*

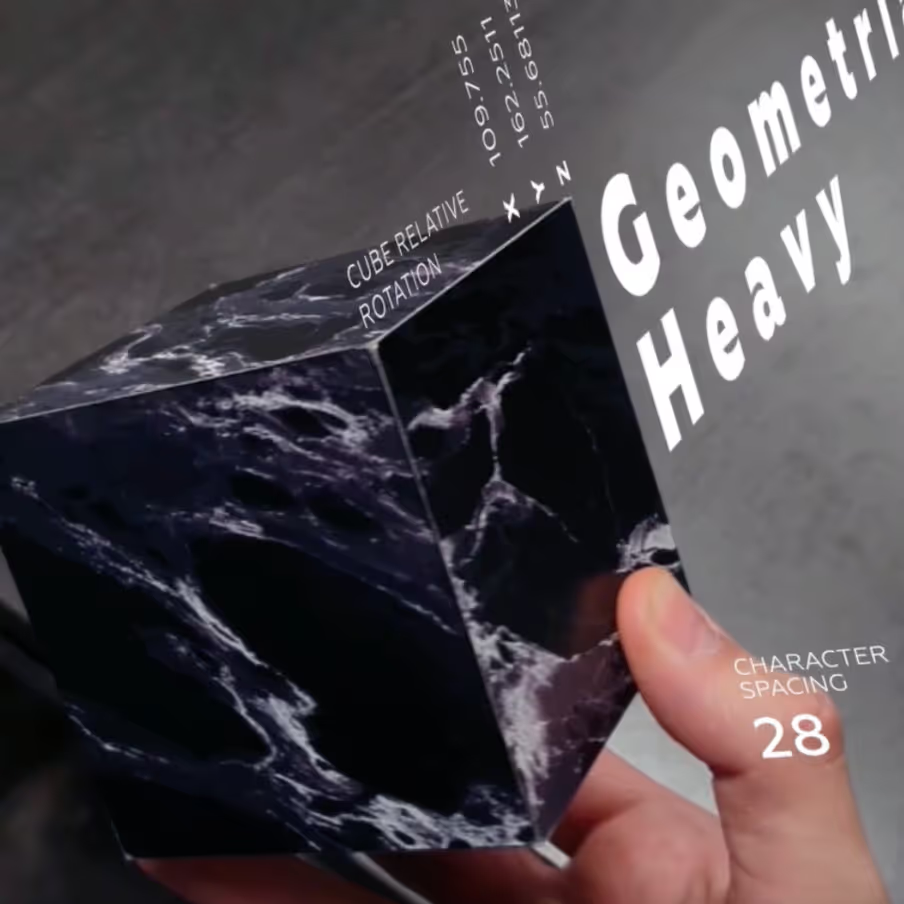

Spatial typography cube

The cube works great as a medium for testing and presenting typefaces in AR — from different angles, distances, and in motion. One neat trick we’ve learned: adjusting character spacing at extreme angles to maintain legibility. Check out the “Geometria” title for this effect.
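
One plausible way to implement angle-dependent spacing: at a viewing angle θ, a line’s horizontal extent appears scaled by roughly cos θ. Spacing can therefore be widened by an amount tied to 1/cos θ − 1, with a cap so it never runs away near grazing angles. This is a heuristic sketch with hypothetical `strength` and `max_extra` parameters, not the rule used for the “Geometria” title.

```python
import math


def extra_tracking_em(view_angle_deg, strength=1.0, max_extra=0.5):
    """Extra letter spacing (em) to counter horizontal foreshortening.

    Heuristic: text viewed at angle theta appears compressed by about cos(theta);
    only the gaps are widened, so glyph shapes themselves stay foreshortened.
    """
    angle = min(abs(view_angle_deg), 89.0)  # avoid dividing by ~0 at grazing angles
    extra = strength * (1.0 / math.cos(math.radians(angle)) - 1.0)
    return min(extra, max_extra)


for angle in (0, 30, 60):
    print(angle, round(extra_tracking_em(angle), 3))
```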

*

Checkerboard game selection

Two players, different games — same checkerboard. So why add extra menus when you can simply rotate the board to make a selection? Physical interaction just works in AR.
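
Using the board itself as a menu can be sketched by snapping its tracked yaw to the nearest quarter turn and mapping each orientation to a game. The game list is hypothetical, and the sketch assumes the tracker reports yaw in degrees.

```python
GAMES = ("checkers", "chess", "reversi", "corners")  # hypothetical menu, one per quarter turn


def selected_game(board_yaw_deg, games=GAMES):
    """Snap the board's yaw to the nearest quarter turn and return the matching game."""
    quarter = round((board_yaw_deg % 360.0) / 90.0) % 4
    return games[quarter]


print(selected_game(95.0))  # chess
```

A real implementation would probably add hysteresis near the 45° boundaries so the selection does not jump back and forth while the board is being turned.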

*

Checkers side selection

After choosing the game, why not rotate the board just a little further… and choose the dark side?
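
Continuing the same idea, the side could be chosen from the small extra twist left over after snapping to a quarter turn. The 20° threshold and the mapping of a positive twist to “dark” are hypothetical.

```python
def selected_side(board_yaw_deg, threshold_deg=20.0):
    """Pick a side from the extra twist beyond the nearest quarter turn."""
    yaw = board_yaw_deg % 360.0
    # Signed offset from the nearest quarter turn, within +/-45 degrees
    residual = yaw - 90.0 * round(yaw / 90.0)
    if residual >= threshold_deg:
        return "dark"   # twisted past the threshold in the positive direction (hypothetical mapping)
    if residual <= -threshold_deg:
        return "light"
    return None  # not twisted far enough yet: keep the current side


print(selected_side(115.0))  # dark
```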

*

Checkers rules/advisor

When you’re not familiar with the game rules or hidden best practices, AR can reveal them for you. Just don’t let your opponent know your glasses are smart…
